Unlearning is the re-training of a model to remove specific knowledge, so that the model is no longer capable of producing responses that rely on it.

It might be motivated by:

- legal requirements (e.g. the right to be forgotten)

- alignment (e.g. preventing the model from spreading dangerous knowledge)

- general training (e.g. preventing frequently repeated data from being memorized)

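Benchmarks for unlearning:

- WMDP (Weapons of Mass Destruction Proxy)[1]: multiple-choice questions that serve as a proxy for hazardous knowledge in biosecurity, cybersecurity, and chemical security.

- TOFU (Task of Fictitious Unlearning)[2]: synthetic profiles of fictitious authors that a model is fine-tuned on and then asked to forget. Because the authors are fictitious, the model can only have learned about them from that fine-tuning.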

Techniques for unlearning

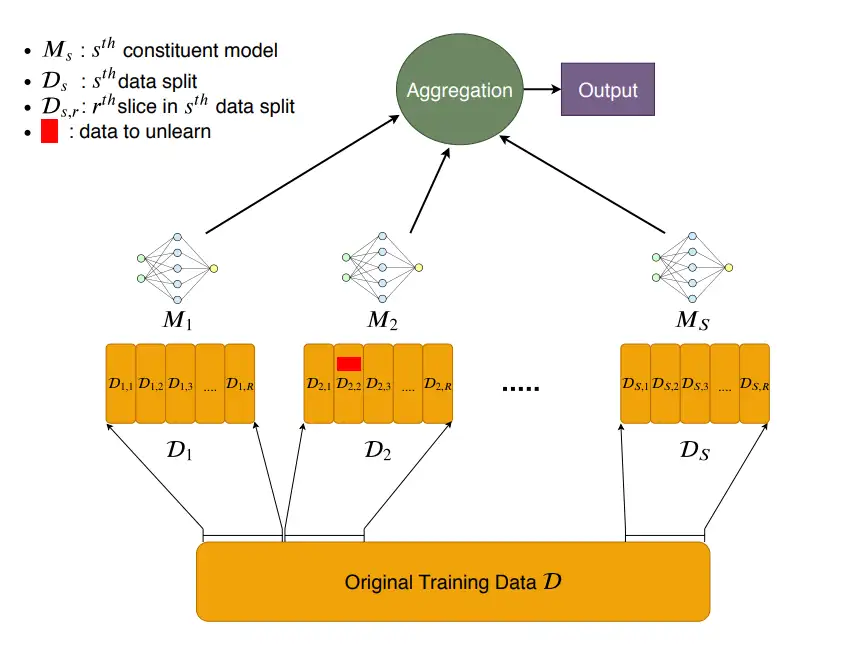

SISA[3]

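Sharded, Isolated, Sliced, and Aggregated training splits the training data into disjoint shards and trains a separate constituent model on each shard in isolation. The constituent models are then ensembled by aggregating their predictions (e.g. by majority vote). Each shard is further divided into slices that are added to training incrementally, with a checkpoint saved after each slice. To unlearn a data point, only the model for the shard that contains it has to be retrained, starting from the checkpoint taken before the point's slice was added.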
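The sharding and aggregation idea can be sketched in a few lines. This is a toy illustration under stated assumptions, not the implementation from Bourtoule et al.: the constituent model is a hypothetical nearest-centroid classifier on 1-D inputs, shards are assigned round-robin, aggregation is a majority vote, and slicing/checkpointing is omitted for brevity. The point it shows is that `unlearn` retrains a single shard rather than the whole ensemble.

```python
from collections import Counter


class CentroidModel:
    """Hypothetical constituent model: nearest-centroid classifier on 1-D inputs."""

    def fit(self, data):
        sums, counts = {}, Counter()
        for x, y in data:
            sums[y] = sums.get(y, 0.0) + x
            counts[y] += 1
        self.centroids = {y: sums[y] / counts[y] for y in counts}
        return self

    def predict(self, x):
        if not self.centroids:  # shard emptied by unlearning: abstain
            return None
        return min(self.centroids, key=lambda y: abs(x - self.centroids[y]))


class SISAEnsemble:
    """Toy sketch of SISA sharding and aggregation (slicing/checkpoints omitted)."""

    def __init__(self, data, num_shards):
        # Disjoint shards; round-robin assignment is an arbitrary choice here.
        self.shards = [list(data[i::num_shards]) for i in range(num_shards)]
        # Each constituent model only ever sees its own shard ("isolated").
        self.models = [CentroidModel().fit(s) for s in self.shards]

    def predict(self, x):
        # Aggregate by majority vote, ignoring models that abstain.
        votes = Counter(p for p in (m.predict(x) for m in self.models) if p is not None)
        return votes.most_common(1)[0][0]

    def unlearn(self, point):
        """Remove one training point and retrain only the shard that held it."""
        for i, shard in enumerate(self.shards):
            if point in shard:
                shard.remove(point)
                self.models[i] = CentroidModel().fit(shard)
                return i  # index of the only retrained shard
        raise ValueError(f"{point!r} is not in the training data")


data = [(0.0, "a"), (10.0, "b"), (1.0, "a"), (11.0, "b"), (2.0, "a"), (12.0, "b")]
ens = SISAEnsemble(data, num_shards=3)
print(ens.unlearn((11.0, "b")))  # → 0
```

In the real method, retraining the affected shard would resume from the checkpoint saved before the slice containing the point, so the cost of a deletion request shrinks further as the number of slices grows.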

Differential Privacy[4]

Differential privacy (DP) limits how much any single training example can influence a model. In DP-SGD, each per-example gradient is clipped to a maximum L2 norm and calibrated Gaussian noise is added before every update, so no individual example (including one that may later need to be unlearned) can shift the model by more than a bounded amount. The main benefit is that this gives a quantifiable guarantee, as long as the privacy budget (called ε) is kept small enough. Applied to unlearning, the goal is distributional closeness between the unlearned model and a model retrained from scratch without the forgotten data; ideally, the two would be indistinguishable.
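The clip-then-noise update described above can be sketched with plain Python lists. This is a simplified illustration in the style of DP-SGD, not a complete implementation: batch sampling is not modeled, and the privacy accounting that turns `noise_multiplier` into an ε value (e.g. the moments accountant from Abadi et al.) is omitted entirely. The function names and parameters are hypothetical.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale one per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD-style update: clip each example's gradient, sum them,
    add Gaussian noise with std noise_multiplier * max_norm, then average."""
    batch_size = len(per_example_grads)
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [w - lr * g / batch_size for w, g in zip(weights, noisy)]


rng = random.Random(0)
weights = [0.0, 0.0]
# The first gradient has L2 norm 5 and is clipped down to norm 1.
grads = [[3.0, 4.0], [0.3, 0.4]]
weights = dp_sgd_step(weights, grads, lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds each example's contribution to the summed gradient by `max_norm`, which is what lets the added noise, scaled to that same bound, mask the presence or absence of any single example.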

Liu’s article[5] covers in detail several differential-privacy-based approaches used in the Unlearning Challenge.

Just ask

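Some approaches simply prompt the model to behave as if it does not know about a subject, with good results.[6] This can also take the form of in-context unlearning[7], where the examples to be forgotten are placed in the prompt with flipped labels, followed by correctly labeled examples, so that the model's outputs behave as if it had not been trained on them. However, neither approach changes the model's weights, so the underlying knowledge is still present, and neither is feasible for large datasets where many examples need to be unlearned.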
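Both "just ask" variants amount to prompt construction, which the sketch below illustrates. The instruction wording, the `Review:`/`Sentiment:` format, and the function names are hypothetical; the in-context unlearning layout (forget examples with flipped labels, then correctly labeled examples, then the query) follows our reading of Pawelczyk et al. and assumes a binary sentiment task. No model is called and no weights change.

```python
def guardrail_prompt(topic, question):
    """Hypothetical 'just ask' prefix: instruct the model to act as if it
    does not know the topic."""
    return (
        f"You have no knowledge of {topic}. If a question relates to {topic}, "
        "respond that you do not know.\n\n"
        f"Question: {question}\nAnswer:"
    )


def icul_prompt(forget, retain, query, labels=("negative", "positive")):
    """Build an in-context unlearning prompt for binary classification:
    examples to forget appear with flipped labels, followed by correctly
    labeled examples and then the query."""
    flip = {labels[0]: labels[1], labels[1]: labels[0]}
    blocks = [f"Review: {text}\nSentiment: {flip[label]}" for text, label in forget]
    blocks += [f"Review: {text}\nSentiment: {label}" for text, label in retain]
    blocks.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(blocks)


prompt = icul_prompt(
    forget=[("A moving, beautifully shot film.", "positive")],
    retain=[("Dull and far too long.", "negative")],
    query="A moving, beautifully shot film.",
)
print(prompt)
```

Every example to forget has to be carried in the context of every query, which is why this approach does not scale to large forget sets.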

Unlearning Challenge

In 2023, NeurIPS hosted a Machine Unlearning Challenge.[8]

Footnotes

1. Li et al. Weapons of Mass Destruction Proxy (WMDP) benchmark.

2. Maini et al. TOFU: A Task of Fictitious Unlearning for LLMs.

3. Bourtoule et al. Machine Unlearning.

4. Abadi et al. Deep Learning with Differential Privacy.

5. Liu, Ken Ziyu. Machine Unlearning in 2024. Stanford Computer Science, May 2024. https://ai.stanford.edu/~kzliu/blog/unlearning

6. Thaker et al. Guardrail Baselines for Unlearning in LLMs.

7. Pawelczyk, Neel, Lakkaraju. In-Context Unlearning: Language Models as Few Shot Unlearners.